EFFDNet: A Scribble-Supervised Medical Image Segmentation Method with Enhanced Foreground Feature Discrimination

MICCAI 2025 Conference Poster

Abstract

EFFDNet introduces a novel approach to scribble-supervised medical image segmentation with enhanced foreground feature discrimination. Traditional fully-supervised segmentation methods require pixel-level annotations, which are time-consuming and expensive to obtain in medical imaging applications.

Our method leverages weak supervision from scribble annotations to achieve competitive segmentation performance while significantly reducing annotation burden. The key innovation lies in the enhanced foreground feature discrimination mechanism that effectively separates foreground and background features in the learned representation space.

EFFDNet demonstrates superior performance on standard medical image segmentation benchmarks, offering an efficient solution for medical image analysis with minimal supervision requirements.

Key Contributions

- Scribble-Supervised Learning: Novel framework that achieves high-quality segmentation with minimal scribble annotations

- Enhanced Foreground Feature Discrimination: Innovative mechanism to improve separation between foreground and background features

- Efficient Weakly-Supervised Approach: Significant reduction in annotation requirements while maintaining segmentation quality

- Medical Image Focus: Specifically designed and optimized for medical imaging applications

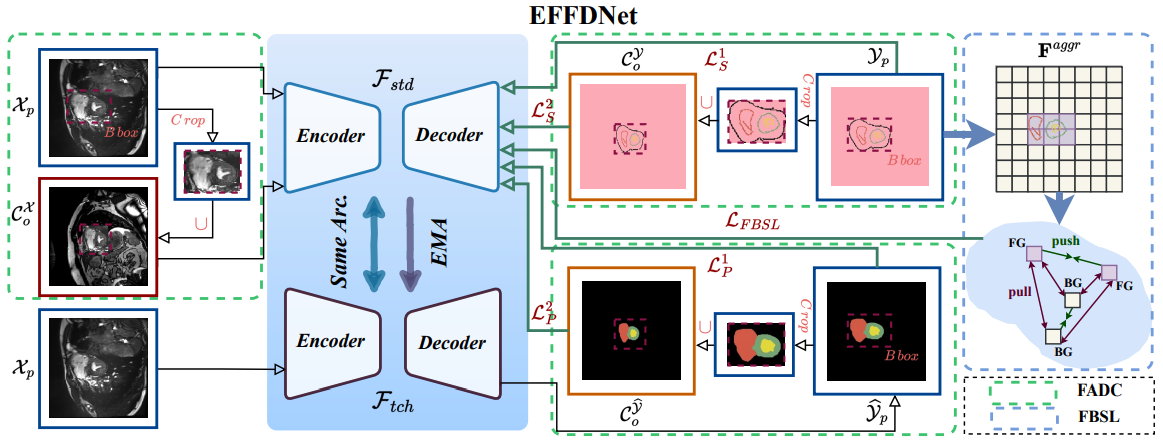

Method Overview

EFFDNet employs a deep learning architecture specifically designed for scribble-supervised segmentation. The approach leverages the inherent foreground-background semantics in scribble annotations to guide the learning process.

The framework incorporates the following key components:

- Scribble-Based Supervision: Uses sparse scribble annotations as the supervision signal during training

- Feature Discrimination Module: Enhanced mechanism to distinguish foreground from background features

- Weakly-Supervised Training: Training strategy that maximizes the information from limited supervision

- Medical Image Optimization: Architecture specifically optimized for medical imaging characteristics

Together, these components target robust segmentation from sparse labels, which makes the framework particularly suitable for medical imaging applications where dense annotations are hard to obtain.
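As an illustration of how sparse scribbles can supervise a segmentation network, the sketch below computes a partial cross-entropy loss, a technique commonly used in scribble-supervised segmentation in which only annotated pixels contribute to the loss. The source does not state which loss EFFDNet uses, so this is an assumption rather than the authors' implementation; the function name `partial_cross_entropy` and the `IGNORE_LABEL` value are hypothetical, and NumPy is used here only to keep the example small.

```python
import numpy as np

IGNORE_LABEL = 255  # hypothetical marker for pixels that carry no scribble


def partial_cross_entropy(logits, scribble, ignore_label=IGNORE_LABEL):
    """Mean cross-entropy over scribble-labeled pixels only.

    logits:   float array of shape (C, H, W) with raw class scores.
    scribble: int array of shape (H, W) holding a class index or ignore_label.
    """
    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))

    labeled = scribble != ignore_label
    if not labeled.any():
        return 0.0  # no supervision signal in this image

    # Select the log-probability of the annotated class at each labeled pixel.
    rows, cols = np.nonzero(labeled)
    picked = log_probs[scribble[rows, cols], rows, cols]
    return float(-picked.mean())


# Toy example: two classes, a 2x2 image, and two scribbled pixels.
logits = np.zeros((2, 2, 2))
scribble = np.array([[0, IGNORE_LABEL], [IGNORE_LABEL, 1]])
loss = partial_cross_entropy(logits, scribble)
```

Unlabeled pixels are masked out entirely, so the gradient comes only from the scribbled regions; any additional foreground-background discrimination term would be added on top of a loss like this one.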

Technical Requirements

Environment Setup

- Python: 3.7 or higher

- PyTorch: 0.4.1 or higher

- Additional Dependencies: TensorBoardX, EfficientNet-PyTorch

- Supporting Libraries: NumPy, scikit-image, SimpleITK, SciPy
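A quick way to confirm that an environment meets the requirements listed above is a small check script. The sketch below uses only the standard library; the import names in `REQUIRED_MODULES` are the usual module names for these packages and should be treated as assumptions, and `check_environment` is a hypothetical helper, not part of the EFFDNet codebase. It checks only the Python version and package presence, not package versions.

```python
import sys
from importlib.util import find_spec

# Package name -> import name (assumed; adjust if your install differs).
REQUIRED_MODULES = {
    "PyTorch": "torch",
    "TensorBoardX": "tensorboardX",
    "EfficientNet-PyTorch": "efficientnet_pytorch",
    "NumPy": "numpy",
    "scikit-image": "skimage",
    "SimpleITK": "SimpleITK",
    "SciPy": "scipy",
}


def check_environment(min_python=(3, 7)):
    """Return (python_ok, missing), where missing lists packages not found."""
    python_ok = sys.version_info >= min_python
    missing = [
        name
        for name, module in REQUIRED_MODULES.items()
        if find_spec(module) is None
    ]
    return python_ok, missing


if __name__ == "__main__":
    python_ok, missing = check_environment()
    print("Python version OK:", python_ok)
    print("Missing packages:", ", ".join(missing) if missing else "none")
```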

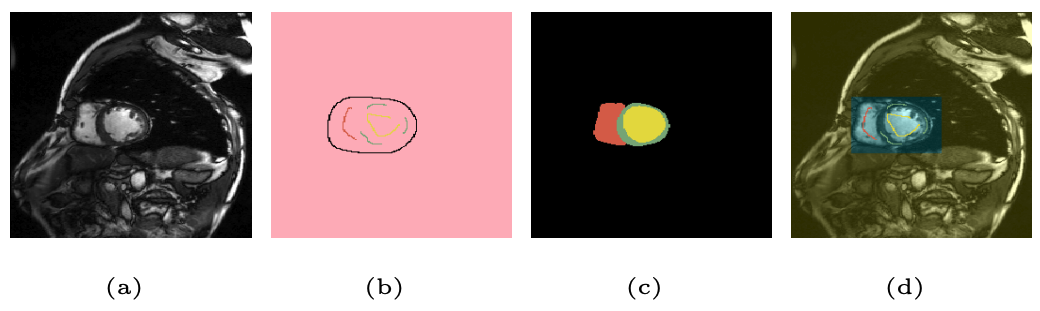

Datasets

- ACDC Dataset: Automated Cardiac Diagnosis Challenge dataset

🔗 Dataset Link

- ISBI-MR-Prostate-2013: Prostate MR image segmentation dataset

🔗 Dataset Link

Results & Performance

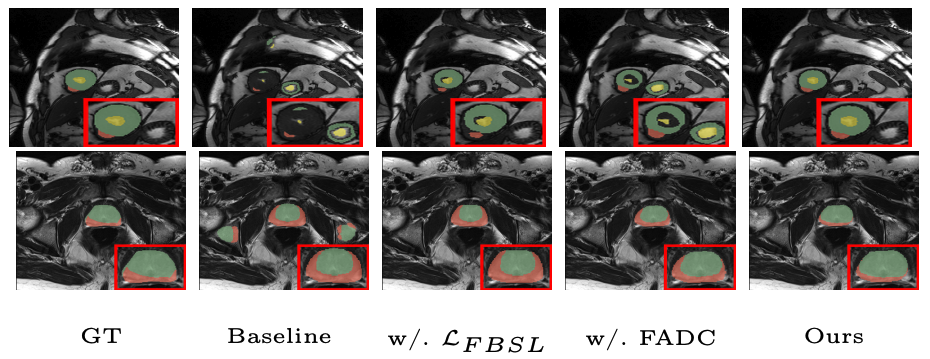

EFFDNet demonstrates competitive performance across multiple medical image segmentation benchmarks:

- ACDC Dataset: Achieves high segmentation accuracy with minimal scribble supervision

- Prostate Segmentation: Superior performance on MR prostate image segmentation tasks

- Annotation Efficiency: Significant reduction in annotation time compared to fully-supervised methods

- Feature Quality: Enhanced foreground-background discrimination in learned representations

The method's ability to achieve high-quality segmentation with minimal supervision makes it particularly valuable for medical imaging applications where expert annotations are expensive and time-consuming to obtain.
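Segmentation accuracy on benchmarks such as ACDC is commonly reported with the Dice coefficient, although the text above does not name the metrics used for EFFDNet. As a minimal sketch under that assumption, the hypothetical helper `dice_score` below computes the per-class Dice score between a predicted and a reference label map.

```python
import numpy as np


def dice_score(pred, target, label):
    """Dice coefficient for one class label between two integer label maps."""
    pred_mask = pred == label
    target_mask = target == label
    denom = pred_mask.sum() + target_mask.sum()
    if denom == 0:
        return 1.0  # convention chosen here: class absent from both maps
    intersection = np.logical_and(pred_mask, target_mask).sum()
    return float(2.0 * intersection / denom)


# Toy example: class 1 covers 3 predicted pixels and 2 reference pixels,
# of which 2 overlap.
pred = np.array([[0, 1], [1, 1]])
target = np.array([[0, 1], [0, 1]])
score = dice_score(pred, target, label=1)
```

A score of 1.0 means perfect overlap and 0.0 means none; multi-class results are usually summarized by averaging the per-class scores.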

Usage & Implementation

EFFDNet is built upon the WSL4MIS open-source framework and provides a comprehensive implementation for scribble-supervised medical image segmentation:

- Repository Setup: Clone the repository and install dependencies

- Dataset Preparation: Download and prepare the ACDC and ISBI-MR-Prostate-2013 datasets

- Model Training: Execute training with scribble supervision using provided scripts

- Evaluation: Test model performance on validation and test sets

The codebase includes comprehensive documentation and example scripts to facilitate easy adoption and experimentation with the proposed method.

Impact & Applications

EFFDNet addresses critical challenges in medical image segmentation by reducing annotation requirements while maintaining high segmentation quality. The framework's applications include:

- Clinical Workflow Integration: Efficient segmentation tools for clinical practice

- Research Applications: Enabling large-scale medical image analysis studies

- Educational Tools: Supporting medical imaging education with interactive segmentation

- Resource-Limited Settings: Practical solutions where expert annotation time is limited

Citation

@inproceedings{effdnet2025,
  title={EFFDNet: A Scribble-Supervised Medical Image Segmentation Method with Enhanced Foreground Feature Discrimination},
  author={MedVisAI Lab Research Team},
  booktitle={Medical Image Computing and Computer Assisted Intervention -- MICCAI 2025},
  year={2025},
  organization={Springer}
}

Published in MICCAI 2025 (Medical Image Computing and Computer Assisted Intervention)